Gazinteract.

Abstract

What is Gazinteract?

I wanted to explore how ordinary screen-based interactions could be made non-tangible through gaze-based gestures. I also wanted to make full use of ordinary video capture devices, such as laptop webcams, rather than expensive eye trackers and other advanced, bulky hardware. To this end, I developed a very basic exploratory application called Gazinteract, which captures a real-time webcam feed and processes it to perform actions on a sample image.

The name Gazinteract combines "Gaze" and "Interact".

Research

Why eye-gaze-based interaction?

The eye has considerable communicative power: eye contact and gaze direction are central cues in human communication. Eye tracking refers to the process of tracking eye movements or the absolute point of gaze (POG), i.e. the point in the visual scene on which the user's gaze is focused.

People with physical disabilities, who may find it difficult to interact with screens or other interfaces, could adapt more easily to this form of non-tangible interaction.

The aim is to experiment with more natural, non-tangible ways of interacting with technology. People were quite reluctant when the first touchscreens appeared, yet today touch is arguably the most natural, intuitive and direct way to interact with screen-based devices. The same holds for voice-based interaction. Eye-gaze-based interaction has the potential to become the next big thing after touch and voice.

This project explores how eye attributes can drive several screen gestures to experiment with, such as zooming, scrolling or clicking.

What is to be detected?

- Location of the eyes.

- Direction of the eye gaze.

- Aspect ratio of the eye opening.

Solution

How do I locate the eyes?

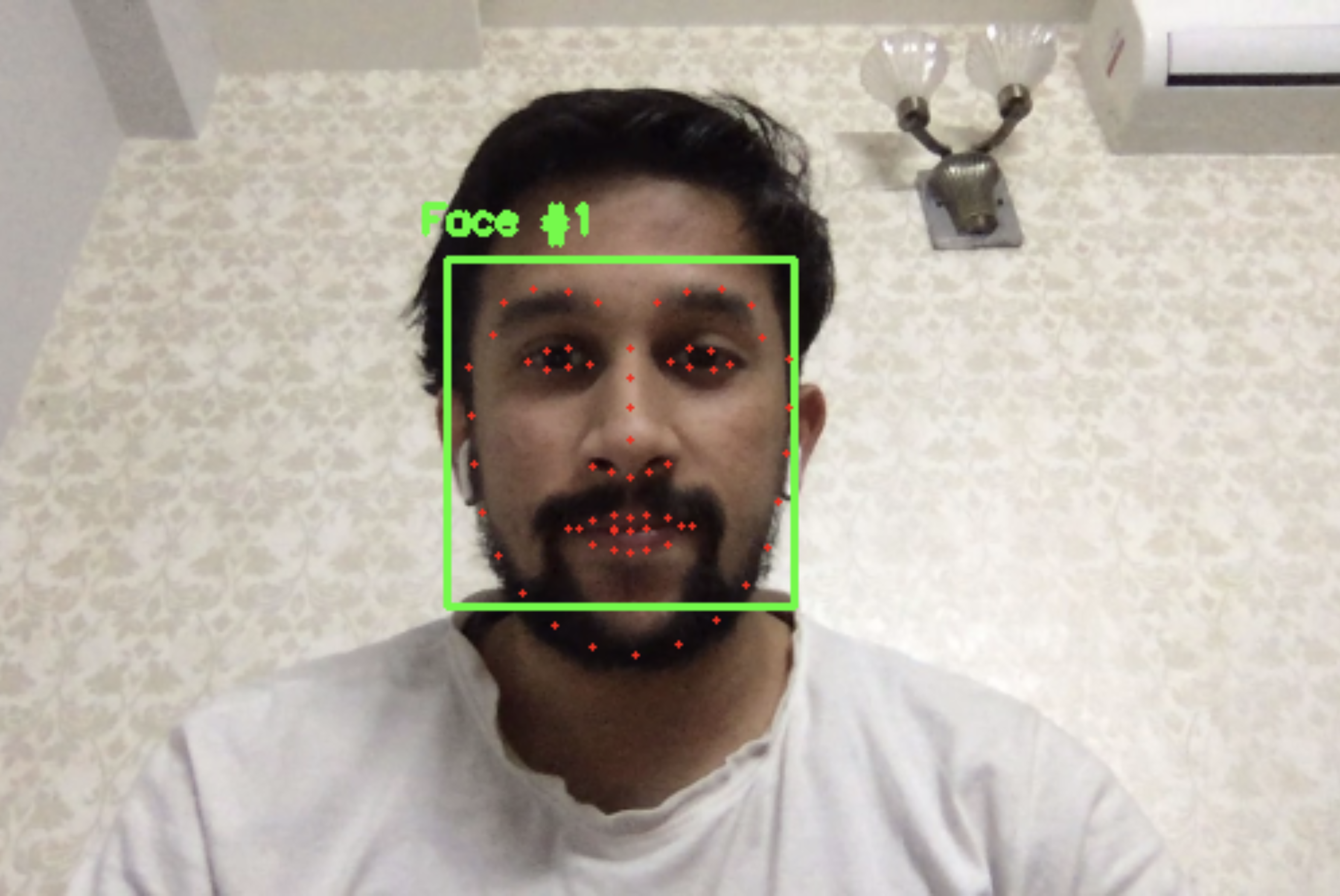

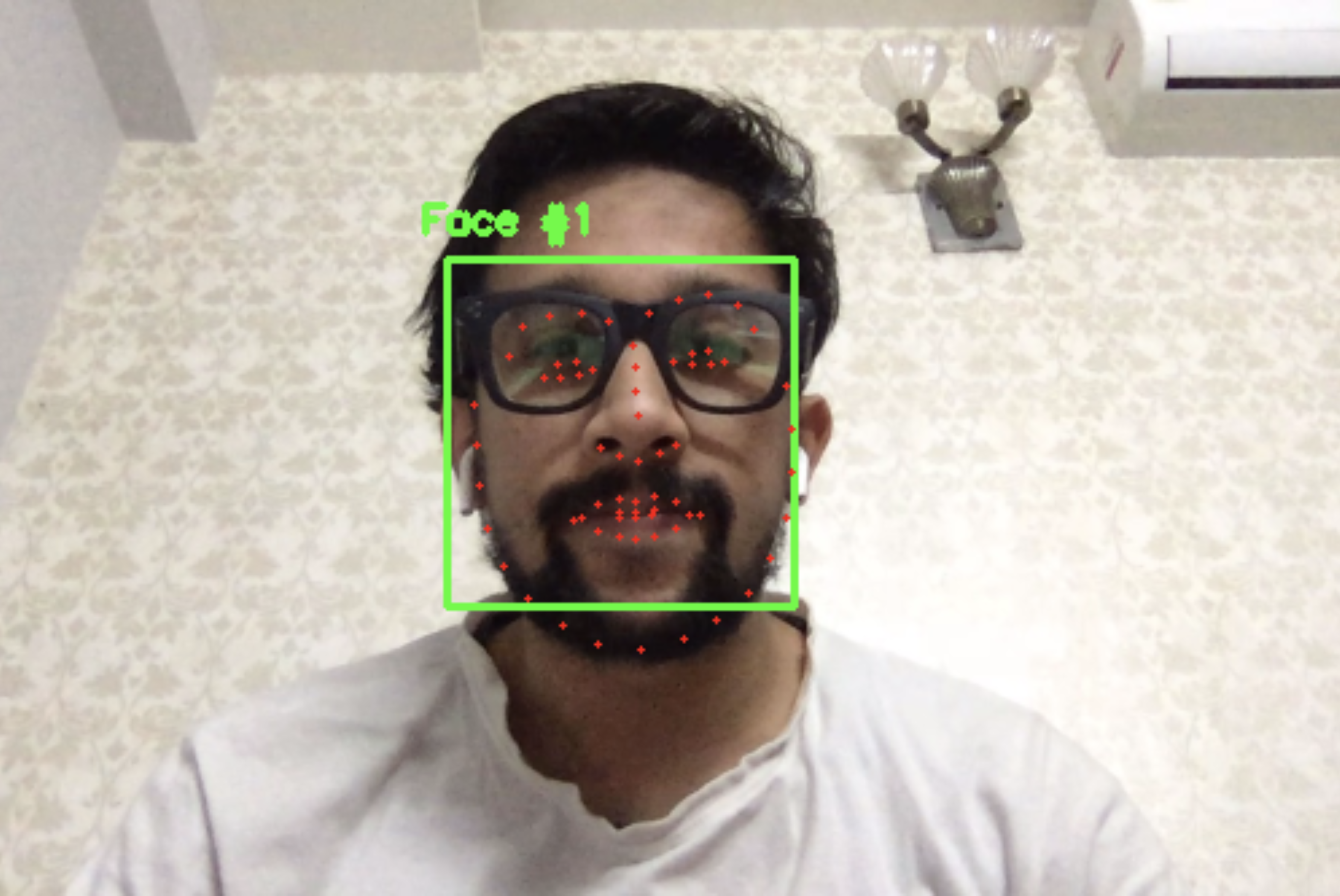

- To locate the eyes, I used a technique called facial landmark detection: the face is first localized in the video frame, and the key facial structures are then detected within the face region of interest (ROI). I followed this excellent article to implement it.

- After detecting the facial landmarks, I selected the points corresponding to the eyes and averaged their positions to obtain an initial default value.
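The eye-location steps above can be sketched as follows. This is a minimal illustration, assuming the common 68-point facial landmark layout (as produced, for example, by dlib's 68-point shape predictor), in which indices 36 to 41 and 42 to 47 outline the two eyes. The landmark detector itself is not shown; `eye_points`, `centroid` and `calibrate_eye_centres` are hypothetical helpers, and averaging each eye's centroid over a few calibration frames is only one possible reading of "averaged their positions".

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# Eye index ranges in the 68-point landmark layout (assumption: the original
# project may have used a different detector or landmark scheme).
RIGHT_EYE = range(36, 42)  # subject's right eye (left side of an unmirrored image)
LEFT_EYE = range(42, 48)   # subject's left eye


def eye_points(landmarks: Sequence[Point], idx: range) -> List[Point]:
    """Pick the six (x, y) landmarks that outline one eye."""
    return [landmarks[i] for i in idx]


def centroid(points: Sequence[Point]) -> Point:
    """Average the x and y coordinates of a set of points."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def calibrate_eye_centres(frames: Sequence[Sequence[Point]]) -> Tuple[Point, Point]:
    """Average each eye's centroid over several frames to get a default eye position."""
    rights = [centroid(eye_points(frame, RIGHT_EYE)) for frame in frames]
    lefts = [centroid(eye_points(frame, LEFT_EYE)) for frame in frames]
    return centroid(rights), centroid(lefts)
```

Each element of `frames` is the list of 68 landmark points detected in one video frame; the returned pair can then serve as the reference position against which later frames are compared.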

How do I detect the focus direction?

- To track the gaze direction, I used the approach offered by WebGazer.js here.

- This approach is reasonably precise, with an approximate error of 104 pixels, which makes it suitable for ordinary eye tracking of moderately sized on-screen objects.
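WebGazer.js estimates the gaze point by learning a regularized (ridge) regression from eye-image features to screen coordinates, using the user's clicks and cursor movements as training data. The sketch below is a minimal pure-Python illustration of that idea, not WebGazer's own code: the feature vectors, the `GazeMapper` class, `fit_ridge`, `solve` and the regularization strength `lam` are all hypothetical. It also shows one common way to express a pixel error like the one quoted above, as the average Euclidean distance between predicted and true points.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square linear system a @ x = b (Gauss-Jordan with partial pivoting)."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_ridge(features: Sequence[Sequence[float]], targets: Sequence[float],
              lam: float) -> List[float]:
    """Ridge regression w = (X^T X + lam * I)^-1 X^T y, with a bias feature appended."""
    x = [list(f) + [1.0] for f in features]
    d = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) + (lam if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(row[i] * t for row, t in zip(x, targets)) for i in range(d)]
    return solve(xtx, xty)


class GazeMapper:
    """Hypothetical mapper from an eye-feature vector to an (x, y) screen point."""

    def __init__(self, lam: float = 1e-3) -> None:
        self.lam = lam  # regularization strength (assumed value)
        self.wx: List[float] = []
        self.wy: List[float] = []

    def fit(self, features, screen_points) -> "GazeMapper":
        """Train one ridge model per screen axis from calibration samples (e.g. clicks)."""
        self.wx = fit_ridge(features, [p[0] for p in screen_points], self.lam)
        self.wy = fit_ridge(features, [p[1] for p in screen_points], self.lam)
        return self

    def predict(self, feature: Sequence[float]) -> Point:
        """Map one feature vector to a predicted on-screen gaze point."""
        f = list(feature) + [1.0]
        return (sum(w * v for w, v in zip(self.wx, f)),
                sum(w * v for w, v in zip(self.wy, f)))


def mean_pixel_error(predicted: Sequence[Point], actual: Sequence[Point]) -> float:
    """Average Euclidean distance, in pixels, between predicted and true gaze points."""
    return sum(math.hypot(px - ax, py - ay)
               for (px, py), (ax, ay) in zip(predicted, actual)) / len(actual)
```

The regularization term keeps the mapping stable when only a handful of calibration clicks are available; a larger `lam` trades some accuracy for robustness to noisy features.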

How do I detect the opening of the eye?

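- Among the facial landmarks obtained, each eye is represented by 6 (x, y)-coordinates, starting at the left corner of the eye (as if you were looking at the person) and then working clockwise around the rest of the region. From these 6 points, I compute the EAR (eye aspect ratio), the ratio of the eye's two vertical distances to its horizontal width:

  $$\text{EAR} = \frac{\lVert p_2 - p_6 \rVert + \lVert p_3 - p_5 \rVert}{2\,\lVert p_1 - p_4 \rVert}$$

  where $p_1, \dots, p_6$ are the six eye landmarks in the order described above.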

- The eye aspect ratio stays approximately constant while the eye is open but falls rapidly toward zero when the eye closes. This makes it comparatively easy to estimate how open the eye is by simply evaluating the formula, rather than applying more involved image-processing techniques.
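The EAR computation described above can be sketched in a few lines. This assumes the six points are ordered p1 to p6, clockwise from the left corner, and uses the standard EAR formula (two vertical distances over twice the horizontal width). The function names `eye_aspect_ratio` and `average_ear` are hypothetical, and averaging the two eyes is an assumption about how the "average EAR" used in the prototype is obtained.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6, ordered clockwise from the left corner.

    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|)
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # two eyelid-to-eyelid distances
    horizontal = math.dist(p1, p4)                     # corner-to-corner width
    return vertical / (2.0 * horizontal)


def average_ear(left_eye: Sequence[Point], right_eye: Sequence[Point]) -> float:
    """Average the EAR of both eyes (assumed aggregation) to reduce per-eye noise."""
    return (eye_aspect_ratio(left_eye) + eye_aspect_ratio(right_eye)) / 2.0
```

For an open eye the vertical distances are a sizeable fraction of the width, while for a closed eye they shrink toward zero, which is exactly the behaviour that makes the EAR a cheap openness signal.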

Prototype

In the following prototype, the average eye aspect ratio (EAR) is first calculated for three states of eye opening (enlarged, normal and shrunken); then, depending on the current EAR value, the picture on the left is zoomed.
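The calibrate-then-zoom loop of the prototype can be sketched as below. This is a minimal illustration, not the prototype's code: `calibrate`, `classify`, `update_zoom` and the zoom limits are hypothetical, and because the write-up does not say which eye state zooms in or out, the `ZOOM_STEP` mapping (enlarged zooms in, shrunken zooms out) is an assumption.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical mapping from calibrated eye state to a multiplicative zoom step.
# Which state zooms in or out is an assumption, not taken from the prototype.
ZOOM_STEP = {"enlarged": 1.1, "normal": 1.0, "shrunken": 1 / 1.1}


def calibrate(samples: Dict[str, List[float]]) -> Dict[str, float]:
    """Average the EAR values recorded while the user holds each eye state."""
    return {state: mean(values) for state, values in samples.items()}


def classify(ear: float, state_means: Dict[str, float]) -> str:
    """Return the calibrated state whose average EAR is closest to the current EAR."""
    return min(state_means, key=lambda state: abs(ear - state_means[state]))


def update_zoom(zoom: float, ear: float, state_means: Dict[str, float],
                min_zoom: float = 0.5, max_zoom: float = 4.0) -> float:
    """Apply one frame's zoom step for the detected state, clamped to [min_zoom, max_zoom]."""
    step = ZOOM_STEP[classify(ear, state_means)]
    return max(min_zoom, min(max_zoom, zoom * step))
```

Classifying by the nearest calibrated mean is equivalent to placing thresholds halfway between adjacent state averages, so the gesture adapts to each user's own eye shape instead of relying on fixed EAR thresholds.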

Reflections

Utility.

- This project set out to explore new ways of interacting with screens. It was designed to work with the ordinary webcams available on almost every computer.

- It introduces an intuitive, non-tangible way of interacting with screen content that aims to let common screen gestures, such as zooming, scrolling and clicking, be performed with eye gestures.

Accessibility.

- People with physical disabilities who cannot interact with screens by touch could find this a convenient alternative.

- Public screens, such as dynamic posters at bus stops, ticket vending machines at metro/subway stations and ATMs, harbour germs that spread as many users touch them one after another. Eye-based interaction with these screens would reduce such risks, which matters especially during the COVID-19 pandemic.